When I was in college I’d spend a few hours each Saturday working for the Santa Barbara Food Not Bombs. A bunch of us would meet up at a friend’s house, then we’d designate tasks – like going to the farmer’s market to ask farmers to donate veggies and stuff, food prep, cooking, etc – and spend a couple hours preparing a bunch of grub. I wasn’t an experienced cook, and I learned a lot during those sessions by staring at a mish-mash of different foods and trying to decide how exactly to cook them together to make a decent meal. It was also fun to hang out with friends, with a goal. Once the food was all prepared, we’d bring it downtown and then set up in a grassy area next to the public library. Many homeless and needy folks would stop by and eat. We weren’t their only resource, of course, and I doubt anyone would have starved to death without the one meal per week that we provided. But it was still nice to group together like that, with zero budget, and help out as we could.

It was crazy to think how all that work went into just one meal, and that other organizations were in the habit of providing homeless and needy people with three meals per day.

I don’t have any experience with other city’s Food Not Bombs programs. But I can imagine that some provided more than just one meal a week, and in places that were more desperate for assistance than Santa Barbara. So, it’s no surprise that some people put out benefit records in order to expand their resources.

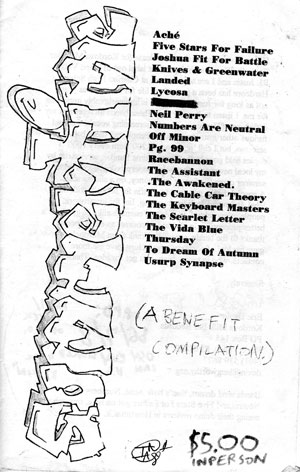

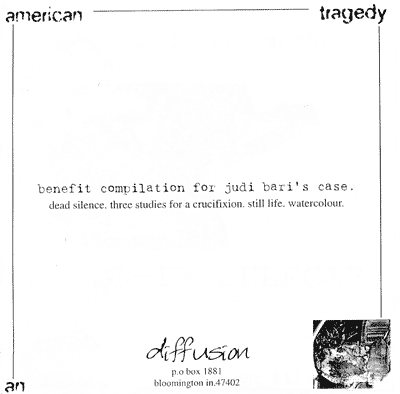

Here are two Food Not Bombs Benefit compilations. One’s from 1994, on Inchworm Records, and the other’s from 1996/7, on Anima Records. Both feature really similar styles of hardcore. They are international in scope, with both male and female-fronted bands, and the styles range from emo-ish, to powerviolence, to metal, and such. Both are great comps, and they do a good job of covering these great times for hardcore punk.

Most recent one first:

Line-up:

Anomie

Palatka

Kathode

Inso Grey

Holocron

Sixpence

Swallowing Shit

Constatine Sankathi

Drift

Brief thoughts about some stuff:

Many of the bands have multiple songs – I like that!

Three more Palatka songs! They are sooo good.

Holocron does their best Orchid impression here. What’s that? This is a couple years before Orchid? Oh, my mistake.

Swallowing Shit destroy it as usual.

Inso Grey, nice female-fronted hardcore.

Constatine Sankathi is basically my favorite hardcore band. So… their song is great, like usual.

I don’t think I’ve heard much Drift before… but it’s members of One Eyed God Prophecy, or something? They certainly sound like OEGP (except not as good). And they got “big,” as far as hardcore bands like this go? Interesting.

This record came with a big booklet. It includes many pages of writing about Food Not Bombs, plus one page per band. I’ve scanned it and included it in the zip!

-download Food Not Bombs Benefit Record (1997)–

Next:

Line-up:

Ten Boy Summer

Swing Kids

Campaign

Indian Summer

Starkweather

Franklin

Fingerprint

Braille

Half Man

Premonition

Railhed

Current

This covers a time when half of the hardcore bands’ singers sounded like the singer of Struggle. I joke! But yeah, like four of the bands on here are like that.

My vinyl is not in as good of condition as the first comp in this post. Plus I don’t have the insert!! CRAP. Doesn’t anyone have it scanned?

Thoughts:

One song each per band. In general, longer songs than the last comp.

Side A – You can’t go wrong with Indian Summer, Campaign, and Swing Kids. You CAN go wrong with Starkweather. Ugh… I’m not into that slow blackish metal stuff. Hey, but at least the song is only… 8 minutes long?? When metal bands play faster, their songs are over quicker; when they’re slower, then they take longer. Science. I dig the Ten Boy Summer track – nice and muddy, a la Allure and Indian Summer.

Side B – I don’t remember this Current song being on their discography. Hmm…

–download A Food Not Bombs Benefit LP (1994)-

Two great comps, for a good cause! Hope you like them. (I know they’ve probably been shared on other sites in the past – but the one from 1997 is harder to find online… plus it has the insert!.) These are both fresh rips. I’m currently battling with my record player – the output wires are acting up. I have to position them just right, or the audio cuts out. It’s lame. I’m going to see if I can get them replaced/fixed. I hope these sound good.

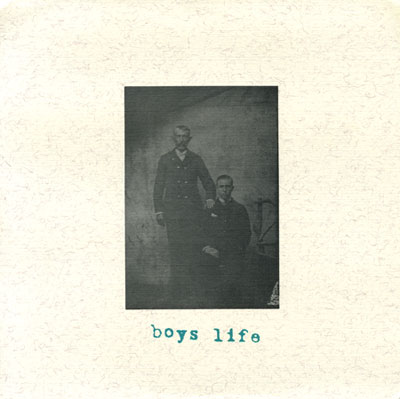

Ricardo requested this. Here’s the 9 song End of the Line lp, released in 1993 on Ebullition. I’m fairly certain this is still available from Ebullition, and so the best way to obtain it is probably to ask your local record store to order it. I’m also really certain that Kent would be super stoked to be rid of them.

Ricardo requested this. Here’s the 9 song End of the Line lp, released in 1993 on Ebullition. I’m fairly certain this is still available from Ebullition, and so the best way to obtain it is probably to ask your local record store to order it. I’m also really certain that Kent would be super stoked to be rid of them.